By: Theodore Lindsey (Cloud Infrastructure Team Lead)

Hello!

I’m Theo, the Cloud Infrastructure Team Lead here at Project Reclass! Today, I’d like to discuss how we reduced our AWS infrastructure costs and how we keep them under control. Many of you who work in the cloud are aware of its many benefits, one of the biggest being the low upfront cost. However, the costs can sneak up on you! If you’re looking for the secret to affordable cloud infrastructure, you just might find it here.

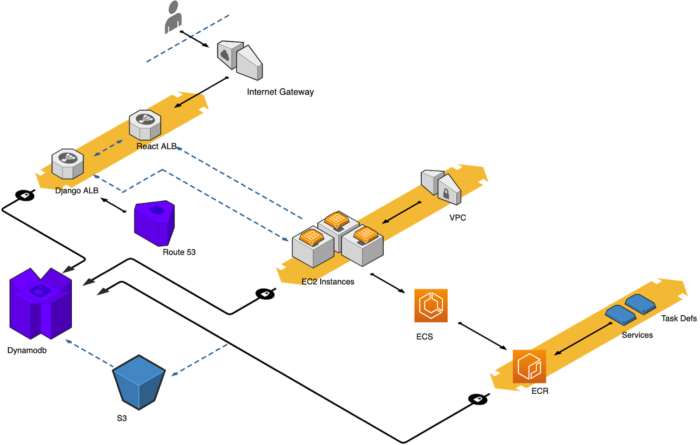

Originally, ToyNet was deployed in Docker containers using Amazon’s Elastic Container Registry (ECR) and Elastic Container Service (ECS). By connecting GitHub Actions to ECR and ECS, we were able to push updates to ToyNet quickly and seamlessly, straight from our GitHub repository. We deployed our resources with Terraform and stored our state in an Amazon Simple Storage Service (S3) bucket. Keeping the remote state in S3 let the entire team reference the same state, and state locking prevented changes from happening simultaneously, so team members pushing different changes could not misconfigure the infrastructure. Finally, we used load balancers to separate the frontend from the backend, as well as to serve the frontend to the end user. The diagram above is a high-level visualization of the original infrastructure.

Being a non-profit, Project Reclass received two thousand dollars of AWS credit in 2020; however, we came dangerously close to exhausting it all. On top of that, all of our infrastructure was hosted on our CTO’s personal AWS account at the time, so we were putting an undue financial burden on her to cover these costs.

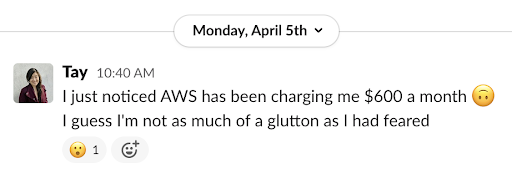

This is definitely not the message you want to see from your CTO, especially four months into the year! To make matters worse, this meant we’d run out of our AWS grant in fewer than four months! Cue the resulting wow react emoji. So, you may be wondering how we got to the point where we were spending six hundred dollars per month. How can a few servers and a couple of load balancers add up to so much money leaving our organization every month? Well, the answer is a bit complex; but in short, we didn’t have billing alerts set up, and we spun up a lot more resources than we needed. And while that ultimately was our downfall, this revelation didn’t put money back into the account of our CTO, Tay, nor did it rescue our quickly dissolving AWS grant. It was time to spring into action!
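To make the "fewer than four months" figure concrete, here is a minimal sketch of the runway arithmetic using the two numbers quoted above ($2,000 of credit, roughly $600 per month). The function name `months_of_runway` is just an illustrative helper, not something from our tooling.

```python
def months_of_runway(credit_usd: float, monthly_spend_usd: float) -> float:
    """Return how many months a fixed credit lasts at a constant monthly spend."""
    if monthly_spend_usd <= 0:
        raise ValueError("monthly spend must be positive")
    return credit_usd / monthly_spend_usd


# Figures from the post: $2,000 of AWS credit, about $600 spent per month.
runway = months_of_runway(2000, 600)
print(f"Credit lasts about {runway:.1f} months")  # Credit lasts about 3.3 months
```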

The first obstacle was identifying the cost of each of our resources. Unfortunately, I didn’t have access to the billing statements, nor did I have any metrics to go by: I was flying blind. But I knew it was up to me. After all, it was my job! So, I took everything I knew about the infrastructure and cross-referenced our resources against the prices AWS lists on its official pricing pages. From there, I discovered that resources like EC2 instances can vary greatly in price based simply on the region. And so, I took action and removed resources in every region other than Ohio. Ohio was the cheapest option for us by far, and moving our production deployment from Oregon to Ohio cut our costs in half! Furthermore, eliminating our development and test environments left only one of our three environments running, which would naturally cut costs by two-thirds: this change alone reduced our approximately $600 bill to around $200 per month.

We also had some runaway resources; by this, I mean medium and large instances running but not hosting anything, Elastic IPs not associated with any instance, and storage being kept but not attached to anything. All of these had to be eliminated. And so they were, saving us roughly one hundred dollars per month. But I wanted to take things slow and see exactly what reduced costs and what didn’t. Prior to reviewing the documentation, I assumed the Elastic Services cost us the most. The key, I found, was to start small, and it worked. Using the AWS cost calculator, I was able to price out each of our resources and begin eliminating those I felt we could live without. Some of the additional resources I removed from the Terraform configuration were redundant security groups, excess regions, and excess availability zones. I also chose to configure Route53 DNS records manually after deployment.
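For readers who want to hunt for the same kind of runaway resources, here is a rough sketch of how it could be scripted. This is not how I did it at the time (I worked through the infrastructure by hand); it assumes the `boto3` library, credentials with read access to EC2, and the response shapes of `describe_addresses` and `describe_volumes`. The helper names and the sample data are made up for illustration, and a large account would also need pagination for volumes.

```python
from typing import Any, Dict, List, Tuple


def unassociated_elastic_ips(addresses_response: Dict[str, Any]) -> List[str]:
    """Return public IPs of Elastic IPs that have no AssociationId."""
    return [
        addr.get("PublicIp", addr.get("AllocationId", "unknown"))
        for addr in addresses_response.get("Addresses", [])
        if "AssociationId" not in addr
    ]


def unattached_volumes(volumes_response: Dict[str, Any]) -> List[str]:
    """Return IDs of EBS volumes whose Attachments list is empty."""
    return [
        vol["VolumeId"]
        for vol in volumes_response.get("Volumes", [])
        if not vol.get("Attachments")
    ]


def fetch_orphans(region_name: str) -> Tuple[List[str], List[str]]:
    """Query one region with boto3 (assumed installed and configured)."""
    import boto3  # third-party; imported lazily so the helpers above work without it

    ec2 = boto3.client("ec2", region_name=region_name)
    return (
        unassociated_elastic_ips(ec2.describe_addresses()),
        unattached_volumes(ec2.describe_volumes()),
    )


# Hypothetical sample responses, shaped like the EC2 API output.
sample_addresses = {
    "Addresses": [
        {"PublicIp": "203.0.113.10", "AllocationId": "eipalloc-1", "AssociationId": "eipassoc-1"},
        {"PublicIp": "203.0.113.11", "AllocationId": "eipalloc-2"},
    ]
}
sample_volumes = {
    "Volumes": [
        {"VolumeId": "vol-attached", "Attachments": [{"InstanceId": "i-123"}]},
        {"VolumeId": "vol-orphan", "Attachments": []},
    ]
}

print(unassociated_elastic_ips(sample_addresses))  # ['203.0.113.11']
print(unattached_volumes(sample_volumes))          # ['vol-orphan']
```

Calling `fetch_orphans("us-east-2")` would run the same checks against a live account in the Ohio region.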

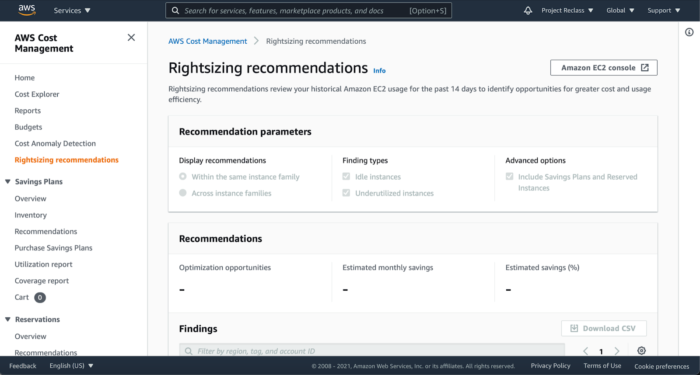

Eventually, after those small changes and downsizing our EC2 instances to nano size, we began to rein in costs. But hosting on Tay’s personal account was unsustainable, so we were also preparing to migrate to a Project Reclass AWS account as soon as possible. It was imperative to understand how to avoid this situation in the future: we needed metrics, visibility, and close management of our resources. We’re certainly giving the Cloud Team Lead billing access. Furthermore, we’re implementing billing alerts and some of the other cool AWS cost management tools, such as reports, budgets, and rightsizing recommendations. These will let us keep an eye on the price of our infrastructure and alert us when we create something that doesn’t fit our budget. An additional benefit is that we no longer have to guess at cost estimates ourselves; instead, we get accurate and definitive data from AWS.
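As an illustration of what a billing alert can look like in code, here is a hedged sketch that builds the arguments for the AWS Budgets `create_budget` call in `boto3`. The budget name, the $100 limit, the 80% threshold, the account ID, and the email address are all placeholder assumptions, not our real settings, and the request shape is written from my understanding of the Budgets API, so check it against the official reference before relying on it.

```python
from typing import Any, Dict


def monthly_budget_request(
    account_id: str,
    limit_usd: float,
    alert_email: str,
    threshold_percent: float = 80.0,
) -> Dict[str, Any]:
    """Build keyword arguments for a monthly cost budget with one email alert."""
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": "monthly-cost-budget",  # hypothetical name
            "BudgetLimit": {"Amount": f"{limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": [
            {
                "Notification": {
                    "NotificationType": "ACTUAL",  # alert on actual, not forecasted, spend
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": threshold_percent,
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [
                    {"SubscriptionType": "EMAIL", "Address": alert_email}
                ],
            }
        ],
    }


# Placeholder values for illustration only.
request = monthly_budget_request("123456789012", 100, "cloud-team@example.org")
print(request["Budget"]["BudgetLimit"])  # {'Amount': '100.00', 'Unit': 'USD'}
```

With credentials that allow budget management, the request could then be sent with `boto3.client("budgets").create_budget(**request)`.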

While the actual resource migration is another blog’s worth of content, it was imperative to truly understand the cost of the infrastructure before migrating it, since this would help us predict and manage future costs as our infrastructure changes. All of our savings were estimates at best; we still didn’t understand the true cost of the infrastructure. We knew the official projectreclass.org website only cost us the price of one micro EC2 instance (roughly $5), but we needed to unveil how much the approximately 50 resources that comprise ToyNet cost. Enter: a Terraform cost estimator. It’s no secret that our ToyNet infrastructure is built entirely with Terraform, as it requires many resources, security groups, and rules. Because our infrastructure is automated, we can also predict its cost with the cost estimator whenever we run a `terraform plan` and output the plan to a JSON file, the preferred file format for the cost estimator. In our case, I took the state stored in S3 to get the real-time cost of the deployed production infrastructure.
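If you have never looked inside a plan’s JSON output, here is a small sketch of the kind of input a cost estimator works from. It assumes the plan was exported with `terraform plan -out=tfplan` followed by `terraform show -json tfplan > plan.json`, and it only counts resources by type using the `resource_changes` list; it does not price anything. The sample plan below is hand-written and heavily trimmed for illustration, not taken from our configuration.

```python
import json
from collections import Counter


def count_planned_resources(plan_json: str) -> Counter:
    """Count resources per type in a Terraform plan JSON, skipping pure deletions."""
    plan = json.loads(plan_json)
    counts: Counter = Counter()
    for change in plan.get("resource_changes", []):
        actions = change.get("change", {}).get("actions", [])
        if actions == ["delete"]:
            continue  # resource is being destroyed, so it will not be billed
        counts[change["type"]] += 1
    return counts


# Hand-written, trimmed example of the plan JSON structure (hypothetical resources).
sample_plan = json.dumps({
    "resource_changes": [
        {"address": "aws_instance.web", "type": "aws_instance",
         "change": {"actions": ["create"]}},
        {"address": "aws_instance.api", "type": "aws_instance",
         "change": {"actions": ["no-op"]}},
        {"address": "aws_lb.front", "type": "aws_lb",
         "change": {"actions": ["create"]}},
        {"address": "aws_eip.old", "type": "aws_eip",
         "change": {"actions": ["delete"]}},
    ]
})

for resource_type, count in sorted(count_planned_resources(sample_plan).items()):
    print(resource_type, count)
```

A per-type count like this is a quick sanity check that the plan contains the resources you expect before you hand it to a pricing tool.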

In the time it took to migrate to the new Project Reclass AWS account and write this blog, AWS reduced the nonprofit grant by half! Instead of two thousand dollars per year, we now have one thousand. As a result, the above infrastructure became unsustainable, especially alongside the upgrades and additions we have planned. I took a hard look at the original configuration and concluded that there was no room left to downsize: we were already at the bare minimum for that design. We had even cut resources down from 50 to 45. The five we removed were Route53 DNS configurations, security groups, and redundant regions that were unnecessary for the bare minimum deployment I was attempting to achieve. The remaining 45, however, were indispensable for the original configuration. As such, a new configuration had to be designed, one that cut out ECR and ECS and reduced our load balancers and EC2 instances. ToyNet is now hosted on a single EC2 instance and uses one load balancer. Ultimately, this cut hosting costs from the above figure of approximately 115 dollars per month to around 30 dollars per month.
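Here is the arithmetic behind "unsustainable", as a minimal sketch using only the figures quoted above (a $1,000 annual grant, about $115 per month before the redesign, about $30 per month after). The helper name `annual_shortfall` is illustrative.

```python
def annual_shortfall(monthly_cost_usd: float, annual_grant_usd: float) -> float:
    """Return how much a year of hosting exceeds the grant (0 if it fits)."""
    return max(0.0, monthly_cost_usd * 12 - annual_grant_usd)


GRANT_USD = 1000  # annual nonprofit grant after the reduction

for label, monthly in [("original configuration", 115), ("new configuration", 30)]:
    yearly = monthly * 12
    print(f"{label}: ${yearly}/year, shortfall ${annual_shortfall(monthly, GRANT_USD):.0f}")
# original configuration: $1380/year, shortfall $380
# new configuration: $360/year, shortfall $0
```

At roughly $360 per year, the new configuration leaves about $640 of the grant for the upgrades and additions we have planned.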

So, now you know how we ended up paying far more for our infrastructure than we needed to. You may also have learned a few tricks to keep prices down for your organization: a few small changes can have a massive impact on your overall costs. With our new configuration, we are still able to automate ToyNet deployment and quickly spin instances up and down for development and testing as needed. We did, however, need to move several resources originally hosted on AWS, such as our Terraform state and Docker images, to other services such as Terraform Cloud and Docker Hub. Moving forward, we’ll be implementing tools such as billing alerts to ensure we don’t exceed a certain amount per day and only scale up when we absolutely need to. Stay tuned to learn how we migrated all of this costly infrastructure away from our CTO’s personal account to an AWS account owned by our actual organization.

The Author

Theodore Lindsey is a fun-loving cloud professional in the U.S. Air Force. He stays active, spends quality time with family and dogs, and fights for education in low-income areas. He wants to provide free education to underrepresented youth.